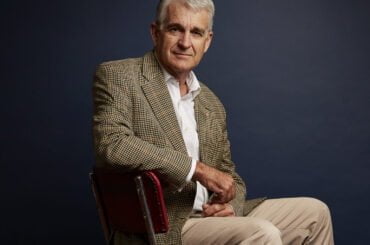

Conversations: with John Lennox, Emeritus Professor of Mathematics at Oxford University

John is joined by mathematician, bioethicist and Christian apologist Professor John Lennox for a profound conversation centered on the current and future impacts of artificial intelligence technology.

Transcript

Chapters

- Introduction

- Do we find truth in science?

- Why is secularism on the rise?

- What is Narrow Artificial Intelligence?

- China’s Social Credit System

- Rapid AI development

- The Dangers of Artificial General Intelligence (AGI)?

- The 2 alarming agendas of the 21st century

- Humanity’s desire for immortality

- What is true faith?

- Conclusion

John Anderson: It’s an extraordinary privilege for me to be in Oxford and able to talk personally to Professor John Lennox, Emeritus Professor of Mathematics at the University of Oxford and for years a professor of mathematics at the University of Wales in Cardiff. He’s lectured extensively all over the world. He’s written widely. Interestingly, he spent a lot of time in Russia and Ukraine after the collapse of Communism, and he’s deeply grieved by what is happening there, and by the idea that young men on both sides, whom he and others have taught and mentored, may now be fighting one another into the dust in these dangerous times in which we live.

But amongst his many writings, he’s gifted us a very useful book on artificial intelligence and the future of humanity, which he tells me he’s already updating, called 2084. That says a lot, in the sense that we all know about 1984. I think you’re telling us that there are some troubling things coming up. John, thank you so much for your time.

John Lennox: It’s my pleasure to be with you.

Do we find truth in science?

John Anderson: Can we begin with the past two years, during the Covid pandemic but also with climate change? We hear this phrase a lot in Australia, and it seems internationally: “Trust the science.” It strikes me that in our allegedly secular age, trust and faith are still seen as pretty important. We haven’t walked away from them.

Do you think those who are accused of not trusting the science are frequently seen as somehow rationally and even morally deficient in an age of crisis? Is science becoming a new “savior”, in inverted commas?

John Lennox: Well, trusting the science is fine if it’s kept to the things at which science is competent. But unfortunately, over the past few years, there has developed a trust in science that we now call scientism, where science is regarded essentially as the only way to truth, the only option for a rational thinking person, and everything else is fairy stories and all the rest of it. And I take great exception to that, because it’s plainly false.

It’s false logically, because the very statement “science is the only way to truth” is not a statement of science. And so if it’s true, it’s false. So it’s logically incoherent to start with. But going a little bit more into it, it has had huge influence because of people like the late Stephen Hawking, for example, who wrote in one of his books that philosophy is dead, and it seems now as if scientists are holding the torch of truth. That’s scientism. The irony of it is, of course, that he wrote it in a book that is all about philosophy of science, and it’s pretty clear that Hawking, brilliant as he was as a mathematical physicist, is really a classic exemplar of what Albert Einstein once said: the scientist is a poor philosopher.

My response to it would be couched very much in the kind of attitude of Sir Peter Medawar, a Nobel Prize winner here in Oxford, who once wrote that it’s so very easy to see that science, meaning the natural sciences, is limited in that it cannot answer the simple questions of a child: Where do I come from? Where am I going? And what is the meaning of life? And it seems to me immensely important that we recover that. What Medawar went on to say is that we need literature, we need philosophy, and, in my view, we need theology as well, in order to answer the bigger questions. Now the late Lord Sacks was a brilliant philosopher. He was the Chief Rabbi of the UK and the Commonwealth and so on.

John Anderson: And one of the guests on this series.

John Lennox: And one of the guests on this series? Well, I’m delighted to hear it. He once wrote a very pithy statement that I found very helpful. He said, you know, science takes things apart to understand how they work, and I suppose to understand what they’re made of. Religion puts them together to see what they mean. And I think that encapsulates the danger in which we are standing. Science has spawned technology. We’ve become addicted to technology, particularly the more advanced forms of it, like AI, the subject of my book, like virtual reality, the metaverse, all this kind of stuff.

We’ve become addicted to it, but we’ve lost a sense of real meaning, and in particular, we’ve lost our moral compass. Einstein again, to quote him, made the point long ago. He said you can speak of the ethical foundations of science, but you cannot speak of the scientific foundations of ethics. Science doesn’t tell you what you ought to do.

It’ll tell you, of course, that if you put strychnine in your granny’s tea, it will give her a very hard time. In fact, it’ll kill her. But it can’t tell you whether you ought to do it or not, to get your hands on her property. And so we’re left in a scientific moral vacuum. Therefore I feel very strongly that, as a scientist of sorts, I need to challenge this.

Science is marvelous, but it’s limited to the questions it can handle, and let’s realize it does not deal with the most important questions of life. And they’re the questions: Who am I? What does life mean? And where do we get a moral compass?

Why is secularism on the rise?

John Anderson: Before we come to artificial intelligence, then, I’d just like to explore what you’ve been talking about a little bit with reference to Britain.

I love history. I’ve always massively admired Britain, and I know Britain seems to be into self-flagellation on just about every issue you can think of at the moment, decrying its own cultural roots. But to my way of thinking, I think in many ways Britain’s been a force for unbelievable good in the world. I really do. I mean, as an Australian, I would not live in a free country if it hadn’t been for the prime minister of this country standing up when no one else did in 1939. Just one minor example. But I come here now and I wonder just what the British people believe in. They were so massively shaped by Christian faith, with arguments, sometimes very ugly, over a long period of time, but nonetheless profoundly shaped.

The Times reported just a couple of years ago that we’ve reached the point where 27% of Britons believe in God, with an additional 16% believing in a higher power. Among the British as a whole, 41% say they believe there is neither a God nor a higher power.

Interestingly, among young people in the UK, the number who said they believe in God rose a little. Nonetheless, what you’ve got here is one of the most secular societies on Earth, which not so very long ago was one of the more Christian. What’s responsible? Is it tied to a sort of false faith in science, amongst other things? Or is it just that it’s too hard? Or is it that the wars have convinced people, when they saw two Christian nations fighting, people praying to the same God for victory? How did the country that you’ve lived in all your life morph so badly into a state of unbelief, do you think?

John Lennox: I find this a complex and difficult issue because I see different strands in it.

If you pick up on the science side, you go back to Isaac Newton, and he gave us a picture of the universe that was very much what’s called a clockwork picture: the universe running on fixed laws that were, according to Newton, originally set in place by God. But it was a universe that essentially now ran on its own.

And you can see that in the 18th century, particularly, that favored what’s called deism. That is, there is a God, but he’s hands off. He started it running, and now it runs, and it runs very well. And you can see that, in the collective psyche, particularly in the academy, it very rapidly led to questions of: is God really necessary?

Now you add that to what was happening on the continent, where there was the Enlightenment and the corrupt church, professing Christianity but utterly corrupt, and the reaction against that, which was really fuel to the fire of a rising secularism and atheism. And then you add to that what was happening around the time of Charles Darwin, where you had Huxley, who was an atheist, and he resented these clergymen, some of whom were actually very good natural philosophers. Wilberforce, for instance, was a much brighter man than many people think, as Darwin pointed out. But Huxley in the UK wanted a church scientific; he wanted to turn the churches into temples to the goddess Sophia, of wisdom, that kind of idea.

So you’ve got all of that, and then you add to that the vitriolic anti-God sentiment, which is not just atheism but anti-God feeling, led for quite a long time by Richard Dawkins and other people. And that’s had huge influence on young people; it’s one of the reasons I entered the fray, actually. Then the media come into this, and it’s even more complicated, because within the media the dominant view, and I think the BBC actually stated this at one time, is that they favored naturalism.

That’s the philosophy that nature is all there is: there’s no outside, there’s no transcendence, there’s no God. So you’ve got all of that, and against it you have a group of people who are often coerced into letting their faith in God become private. This is the tragedy of secularism. And then you get into the cancel culture, the woke culture, all this kind of stuff where I’ve got to affirm everything.

Everything’s equally valid. You’ve got relativism and postmodernism, at least in things that people think don’t matter. You never meet a postmodern business person who goes to the bank manager and says, “I’ve got $5,000 in the bank,” and when the bank manager says, “Well, actually, you owe the bank 10,000,” replies, “No, that’s only your truth.” That doesn’t work in the business world. But still you’ve got this pressure of relativism, and so you end up, as Michael Burke put it a few years ago, talking about faith in God in Britain, with the first generation that doesn’t have a shared worldview. Now there’s still a Christian influence, as even atheists recognize, but we’ve gone a long way in rejecting God and abandoning God.

And then there’s the entertainment industry that will fill everybody’s vacuum with noise, and we entertain ourselves to death. So your question is extremely complex, and it would need a more observant person than me to give you a full answer. It’s a huge mix of stuff, and any individual person may be affected by it in completely different ways.

What is Narrow Artificial Intelligence?

John Anderson: The reason it’s important, I think, to set that up is that we now come to what I really wanted to hear your views on: artificial intelligence, because science is giving us extraordinary capabilities. Yes. But will we simply be seduced by it, in the sense that artificial intelligence is rapidly creating things that are marvelous, that we want to enjoy, that may satiate us and may dull us, while aspects of the emergence of AI could be very dangerous?

But before we start to explore that, for ordinary people in the street like me, who are not living with this (well, I am living with this stuff, but I don’t know where it might go), we need to define some terms. What is AI? What is what I think you call narrow AI, of the sort that we’re quite familiar with: limited intelligence, but highly focused on narrow areas?

What is artificial general intelligence, and where might that go? There’s a whole number of issues. Then there’s the whole issue of transhumanism. So can we start very broadly: AI is what, John? How would you explain it to a layman? We’ve all heard the term.

John Lennox: Sure. Well, the first thing to realize is that the word “artificial” in the phrase “artificial intelligence” is real, and that’s not due to me; it’s due to one of the pioneers of the subject, who happens to be a Christian. And we’ll take a narrow AI system first, because it’s much easier to explain. A narrow AI system is a system involving a high-powered computer, a huge database and an algorithm that does some picking and choosing, whose output is something that normally requires human intelligence to do.

That is, if you look at the output, you would normally say that it’s taken an intelligent person to do that. So let’s take an example that is very important these days in medicine, and that’s interpreting X-rays. So we have a database; let’s say it has 1 million X-rays of lungs that are infected with various diseases, say related to Covid-19.

They are then labeled in the database by the world’s top experts. Then they take an X-ray of your lungs or my lungs, and the algorithm compares the X-ray of your lungs with the million very rapidly, and it produces an output which says John Anderson has got that disease. Now at the moment, that kind of thing, which is being rolled out not only in radiology but all over the place, will generally give you a better result than your local hospital will.

And that’s hugely important and hugely valuable. But the point is the machine is not intelligent. It’s only doing what it’s programmed to do. The database is not intelligent. The intelligence is the intelligence of the people who designed the computer and who know about X-rays and know about medicine. But the output is what you would expect from an intelligent doctor.
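As a rough illustration of the X-ray example, the “compare your scan with a million expert-labelled scans” step can be sketched as a k-nearest-neighbour vote. This is a minimal sketch under stated assumptions, not a description of any real diagnostic product: it assumes each image has already been reduced to a short numeric feature vector, and the data, the labels, `LABELLED_XRAYS` and `classify` are all hypothetical. Real radiology systems generally use trained neural networks rather than comparing against every stored image.

```python
import math
from collections import Counter

# Hypothetical "database": each entry pairs a made-up feature vector
# (standing in for a processed chest X-ray) with an expert-assigned label.
LABELLED_XRAYS = [
    ((0.9, 0.1, 0.2), "covid-19 pneumonia"),
    ((0.8, 0.2, 0.1), "covid-19 pneumonia"),
    ((0.1, 0.9, 0.3), "bacterial pneumonia"),
    ((0.1, 0.1, 0.1), "clear"),
]


def classify(scan, database=LABELLED_XRAYS, k=3):
    """Label a new scan by majority vote among its k closest labelled entries."""
    # Rank every stored example by Euclidean distance to the new scan.
    nearest = sorted(database, key=lambda entry: math.dist(scan, entry[0]))[:k]
    # Count the expert labels of the k nearest examples and return the commonest.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((0.85, 0.15, 0.15)))  # prints: covid-19 pneumonia
```

Nothing in this code “knows” anything about lungs. It only measures distances; whatever expertise the output seems to show comes from the labels the experts supplied, which is the point being made about where the intelligence actually lies.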

So it’s in that sense artificial. It’s a system that is narrow, in the sense that it only deals with one thing, and endless kinds of such systems are being rolled out around the world. Some of them, as you mentioned, are extremely beneficial. Narrow AI has been used in the development of vaccines, and the spin-off from that technology in drug development is enormous.

And on and on it goes. I could give you dozens of examples, and they’re in my book. So that’s where we start. Now, we are familiar with it, and it’s worth giving a second example, because most of us are voluntarily wearing, first of all, a tracker. It’s called a smartphone. Yes, it knows where we are.

It could even be recording what we’re saying. But what it does do, of which we’re all aware, is this: if we, for example, buy a book on Amazon, we’ll very soon get little pop-ups that say, “People who bought that book are usually interested in this book.” What’s happening there is that the AI system is creating a database of your preferences, your interests, your likes, your purchases, and is using that to compare with its vast database of available things for sale so that it predicts what you might like.

So this is of huge commercial value, and it leads to something else which most of us don’t know about. We can come to that later, but I’ll mention it now: it’s called surveillance capitalism, and there’s a book on it by Shoshana Zuboff, an emerita professor at Harvard Business School, that is regarded as a very serious book, because the point she’s making is that global corporations are using your data and, without your permission, are selling it off to third parties and making a lot of money out of it. That raises deep privacy issues. So now you’re straight into the ethics. So that’s narrow AI.
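The “people who bought that book are usually interested in this book” pop-up can be approximated, very roughly, by counting how often titles turn up together in customers’ purchase histories. This is a minimal sketch, assuming hypothetical purchase data and hypothetical function names (`build_co_purchases`, `also_bought`); production recommender systems are considerably more elaborate and draw on far more signals than purchases alone.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase histories: one set of book titles per customer.
ORDERS = [
    {"1984", "Brave New World", "2084"},
    {"1984", "2084"},
    {"1984", "Animal Farm"},
    {"Sapiens", "Homo Deus"},
]


def build_co_purchases(orders):
    """Map each title to a Counter of the titles bought by the same customers."""
    counts = {}
    for basket in orders:
        # Every unordered pair in a basket counts once in each direction.
        for a, b in combinations(sorted(basket), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def also_bought(title, counts, n=2):
    """Return up to n titles most often bought alongside `title`."""
    return [other for other, _ in counts.get(title, Counter()).most_common(n)]


co = build_co_purchases(ORDERS)
print(also_bought("1984", co, n=1))  # prints: ['2084']
```

Even this toy version only works because it keeps a record of who bought what, which is exactly the kind of behavioural data the surveillance-capitalism concern is about.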

John Anderson: Okay, so let’s stay on narrow AI and extend our road a little bit further down towards broader use.

You’ve just talked about us being unaware, in a way, of how we’re being surveilled. Yes. And it was right here in Oxford, I think it may have been you who made the point, I can’t remember, in a talk that I heard, where the point was made that what’s happening in China, using artificial intelligence to surveil people, is astonishing, but in many ways all that information’s being collected in the West as well.

It’s just not collated in the same way.

John Lennox: That’s correct. And this is perhaps one of the scariest aspects of it. What we’re talking about here is facial recognition by closed-circuit television. Well, it starts with facial recognition, but we’ve now got to the stage where, in China in particular, they can recognize you from the back, by your gait, by all kinds of things.

And what has happened is, and you can see the positive benefit, police want to arrest criminals or thugs or rowdies, even in a football crowd. And so, using facial recognition technology, they can pick a person out and arrest him or her. Well, okay. But what can be used for good purposes in that sense, in keeping law and order, can also become, particularly in an autocratic state, an instrument of control. And here’s the huge dilemma which people try to solve: how much of your privacy are you prepared to sacrifice for security? There’s a tension between those two things. Now in China, you mentioned, and you’re probably thinking about Xinjiang, where you’ve got a minority, a Muslim minority of Uyghur people, the surveillance level on them is unbelievable.

Every few hundred meters down the street, they have to stop. They have to hand in their smartphones. The smartphones are loaded with all kinds of stuff by the government. Their houses have QR codes outside them showing how many people live there, and all this kind of thing. And I don’t know how many, it’s way over a million, I believe, are being held in re-education centers as a result of what is being picked up by artificial intelligence systems. And the suspicion is that the culture is being destroyed and eradicated.

China’s Social Credit System

John Lennox: That’s on the one hand, and that’s in one particular province. But elsewhere in China, we now have the social credit system, which apparently will be rolled out in the entire country.

We are given, say you and I were given, to start with, let’s say 300 social credit points. And we’re being tracked. If we fail to put our rubbish, our trash can, out at night, there’ll be marks against us. If we go somewhere dubious or mix with someone whose political loyalties are suspect, we get more negative points.

On the other hand, if we pay our debts on time and go green, so to speak, or all this kind of thing, we will amass more credit points. And then, if we are going negative, the penalties kick in. We’ll discover we can’t get into our favorite restaurant. We’ll discover we don’t get that promotion or don’t even get that job we apply for, or that we can’t travel, or that we can’t even have a credit card.

And this is being rolled out, and the list of penalties and things that have actually been recorded is very serious. What amazed me when I first came across this was the fact that many people welcome it. They think it’s wonderful. They boast, “I’ve got a thousand points. How many have you got?”

And they don’t realize that the whole of life is becoming controlled, ostensibly in the interests of having a healthy society. So talk about 1984. Now this is not futuristic speculation; this is already happening. George Orwell, whom you mentioned, wrote 1984. He talked about Big Brother watching you, and that technology would eventually do it. It is doing it. This is narrow AI. This is not futuristic in any way. It’s what’s actually happening at the moment. And you mentioned briefly the fact that all this stuff exists in the West, except that, and the point has been made forcibly, it’s not quite yet under one central authority and control. But it is coming.

We have credit searches; we have all kinds of stuff that is beginning to creep in, in the US and in the UK, and I presume also in Australia. And we even have police forces here, I believe, who want the whole caboodle, who want to be able to exert a much more serious level of control. And it is frightening, because what it does to human rights is... well.

John Anderson: So it occurs to me that, you know, I love history, as I’ve mentioned.

Authoritarian regimes have collapsed under their own weight. Typically, the people have risen up one way or another, and there’s been an overturning. We’ve never had autocratic regimes that have had this surveillance capacity. There are, you know, an estimated 400 million closed-circuit television cameras in China; that’s about one for every three people.

I mean, it’s mind-boggling.

John Lennox: Oh, it is mind-boggling. And even here in the UK, what I’m told is that you’re on a closed-circuit TV camera every five minutes when you’re moving around. So it is very serious. And of course the irony is, as I hinted at earlier, here we are with our smartphones that have got all these capacities, certainly at the audio level, and we’re voluntarily wearing them.

So we’re voluntarily ceding part of our autonomy and our rights, really, to these machines, when we don’t really know what is being done with all the information. So we have a huge problem, and someone has said we’re sleepwalking into all of this, so that we’re captured by it, we’re imprisoned by it, and we wake up too late, because the central authority has got so much control that we cannot escape anymore.

Rapid AI development

John Anderson: Let’s go back to where I started. Science is blessing us, because a lot of these things are fantastic, you know, incredible technology and capabilities, and you’ve alluded to some of the useful things.

I mean, I love the way in which I can, in my car, say, “Hey Siri, call my wife.” Yes. Just fantastic. But my question about what we now believe goes to the heart of who we think we are. What is our status? On what basis will we be alert enough to recognize we need to make tough decisions?

And then on what basis will we make the ethical decisions around how far this goes? I know that’s a complicated question. But there’s another element to it, because we haven’t even got into general artificial intelligence yet. We’re still talking, as I understand it, about narrow artificial intelligence, just masses of it.

Yes. There are those surveillance cameras and the people at their desks in Beijing, you know, collating the information and what have you. There might be a lot of information and a lot of capability, but those cameras can’t think of another task, you know, how to go and bring my boss a cup of coffee.

It’s still narrow.

John Lennox: That’s absolutely right. That’s before we’ve got to general intelligence. Yes. And we’ve got to realize several things. First of all, the speed of technological development outpaces ethical underpinning, by a huge factor, an exponential factor. Secondly, some people are becoming acutely aware that they need to think about ethics.

Yeah. And some of the global players, to be fair, do think about this because they find the whole development scary. Is it going to get out of control? And someone made a very interesting point, uh, I think it was a mathematician who works in artificial intelligence and she was referring to the book of Genesis of the Bible and she said, God created something.

And got out of control us. We are now concerned that our creations may get out of control, and I suppose in particular, one major concern is autonomous or self-guided weapons, and, and that’s a huge ethical field. Here’s a, a man sitting in a trailer in the Nevada desert and he’s controlling a drone in the Middle East and it.

Fires a rocket, uh, and destroys a group of people. And of course he just sees a puff of [00:30:00] smoke on his screen and that’s it. Done. And there’s huge distance between the operation of that lethal mechanism. And we only go up one more from that where these lethal flying. Bombs, so to speak, control themselves.

We’ve got swarming drones, and we’ve got all kinds of stuff. Who’s gonna police that? And of course every country wants them, because they want a military advantage. So we’re trying to police that and to get international agreement, which some people are trying to do now. I don’t think we must be too negative about this, and I’m cautious here, but we did manage, at least temporarily, who knows what’s gonna happen now, to get nuclear weapons at least controlled and partly banned.

So there’s been some success. But with what’s happening in Ukraine at the moment, with Putin and so on, whether he’s gone... He could fire a tactical nuclear weapon, or it could be controlled autonomously and make its own decision. Yeah. And then where do we go from there? And these things are exercising people at a much lower level, but it’s still the same problem.

How do you write an ethical program for self-driving cars? Yeah.

John Anderson: So that if there’s an accident that can’t be avoided...

John Lennox: Yes. Which, where, what do you knock down? It’s the switch-tracks dilemma again, that you put before students of ethics, and it’s very interesting to see how people respond.

The switch-tracks dilemma is simply that you have a train hurtling down a track, and there are points, and it can be directed down the left-hand or the right-hand side. Down the left-hand side, there’s a crowd of children stranded in a bus on the track. On the right-hand side, there’s an old man sitting in his cart with a donkey.

And you are holding the lever. Do you direct the train to hit the children or the old man? That kind of thing. But we’re faced with that all the time, and it’s hugely difficult, without going near AGI yet.

The Dangers of Artificial General Intelligence (AGI)?

John Anderson: And let’s come to AGI. Yeah. What is AGI? Because up until now we’ve been talking about intelligence that’s not human. It can’t make judgements, it can’t switch tasks, it can’t multitask. It can just be built up to do one thing enormously well, even though that might be massively intrusive, as we’ve talked about with surveillance technology. Correct. But now we’re talking about something different altogether.

General. Yes, we are. Artificial general intelligence means...

John Lennox: Well, it means several things. The rough idea is to have a system that can do everything, and more, that human intelligence can do, and do it better, do it faster, and so on: a kind of superhuman intelligence, which you could think of, at least in its initial stages, as being built up out of a whole lot of separate narrow AI systems.

And that will surely be done to a large extent. But research on AGI, and of course it’s the stuff of dreams, it’s the stuff of science fiction, so people absolutely love it, moves in two very distinct directions. There’s, first of all, the attempt to build machines to do it, machines that are based on silicon, computers, plastic, metal, all that kind of stuff.

And then there is the idea of taking existing human beings and enhancing them with bioengineering, drugs, all that kind of thing, even incorporating various aspects of technology so that you’re making a cyborg, a cybernetic organism, a combination of biology and technology, to move into the future so that we move beyond the human.

And this is where the idea of transhumanism comes in: moving beyond the human. And of course the view of many people is that humans are just a stage in the gradual evolution of biological organisms that have developed according to no particular direction, through the blind forces of nature.

But now we have intelligence, so we can take that into our own hands and begin to reshape the generations to come and make them according to our specifications. Now that raises huge questions. The first one, of course, is identity. What are these things going to be, and who am I in that kind of situation?

Now AGI, as I mentioned, is something that science fiction deals with a lot. The reason I take it seriously is that it’s not only science fiction writers who take it seriously. For example, one of our top scientists, possibly the top scientist, our Astronomer Royal, Lord Martin Rees, takes this very seriously and says that some generations hence we might effectively merge with technology.

Now that idea of humans merging with technology is, again, very much in science fiction, but the fact that some scientists are taking it seriously means in the end that the general public are going to be filled with these ideas, speculative on the one hand but espoused by serious scientists on the other. So we need to be prepared and get people thinking about them, which is why I wrote my book. And in particular, in that book I engaged not with scientists but with the historian Yuval Noah Harari. Yeah. An Israeli historian.

John Anderson: Can I interrupt for a moment to quote something that he said? Yes, sure. Just to frame this so beautifully, he actually said this, and I’m glad you’ve come to him:

“We humans should get used to the idea that we’re no longer mysterious souls. We’re now hackable animals. Everybody knows what being hacked means now, and once you can hack something, you can usually also engineer it.” Mm-hmm. I just put that in for our listeners as you go on with your interactions.

John Lennox: That’s a typical Harari remark.

He wrote two major bestselling books, one called Sapiens, that is Homo sapiens, human beings, and the other, yes, Homo Deus. And it’s with that second book that I interact a great deal, because it has huge influence around the world. What he’s talking about in that book is re-engineering human beings and producing Homo deus, spelled with a small d.

He says think of Greek gods: turning humans into gods, something way beyond their current capacities and so on. Now I’m very interested in that, from a philosophical and from a biblical perspective, because the idea of humans becoming gods is a very old idea, and it’s being revived in a very big way.

The 2 alarming agendas of the 21st century

John Lennox: Now, to make it more precise, Harari sees the 21st century as having two major agendas. The first, as he puts it, is to solve the technical problem of physical death, so that people may live forever: they can die, but they don’t have to. And he says technical problems have technical solutions, and that’s where we are with physical death. That’s number one.

The second agenda item is to massively enhance human happiness. Humans want to be happy, so we’ve got to do that. How are we going to do that? By re-engineering them from the ground up, genetically and every other way: drugs, et cetera, all kinds of different ways, adding technology, implants, all kinds of things, until we move humans on from the animal stage, which he believes came about through no plan or guidance.

We, with our superior brainpower, will turn them into superhumans; we’ll turn them into little gods. And of course then comes the massive range of speculation: if we do that, will they eventually take over? And so on and so forth. So that is transhumanism, connected with artificial intelligence.

Humanity’s desire for immortalityJohn Lennox: So, Connected with the idea of the superhuman, and people love the idea. [00:40:00] And you probably know there are people, particularly in the USA, who’ve had their brains frozen after death. They hope that one day they’re gonna be able to upload their contents onto some silicon based thing that will endure forever, and that will give them some sense of immortality.

Now, if you notice those two things, John, solving the problem of physical death and re-engineering humans to become little gods, they have everything to do with wanting immortality. Yeah. And as a Christian, I have a great deal to say about that, because what’s happening in transhumanism, the desire for that, is, I believe, a parody of what Christianity is actually all about.

John Anderson: To some extent, though, it reflects the fact that the very great majority of us are conscious that, deep down, we don’t want to think we’ll come to an end. Oh no, we don’t. I’m an individual who actually has no great aspiration to live to an advanced old age.

John Lennox: Well, I’m the same. Frankly, I don’t want to, not in this situation.

John Anderson: No, no. That’s not to say I don’t enjoy life; it doesn’t mean that at all. No. It just means I don’t aspire to a great physical old age. Yes. Frailty and what have you. And I have a different perspective on what happens after that, but deep down, I don’t want to think it ends with that physical death. No. And I think that’s pretty much hardwired into all of us.

John Lennox: I think it’s hardwired, and that’s important. This business of what’s hardwired into human beings, so to speak, I think is vastly important. Many years ago I came across that idea in the moral sense, in CS Lewis, who talks about it in his book The Abolition of Man, and it’s relevant to what we’re talking about at the moment.

There is an appendix at the end of The Abolition of Man where he points out that if you look at every culture around the world, they may differ, but they’ve got certain moral rules in common. It looks as if morality is hardwired. I believe it is, by a benevolent Creator. But now we come up to this, and we see that there’s hardwiring again.

At this particular level, God has set eternity in the human heart. Now, of course, that’s a theistic perspective, but if you take the atheistic view, then you’ve got to explain where it comes from. And again, I find CS Lewis, as always, right on the money, so to speak. He makes the point, and I’m going to paraphrase it slightly:

It would be very strange to find yourself in a world where you got thirsty and there was no such thing as water. Yeah. Now, I think that longing is a very powerful thing, and CS Lewis has written a great deal about it, including a brilliant essay called The Weight of Glory. That longing for another world implies (these are not his words, but they’re his sentiments) that we were actually made for another world.

Now, I feel that the transhumanist quest is an expression of the fact that we’re hardwired with a longing for something transcendent, and it’s trying to fulfill it. And I have reason for thinking it won’t do that, but you may want to ask about that later.

What is true faith?

John Anderson: Well, I think we’re probably coming in to land. The thing that I wanted to explore with you for a moment is that I think a lot of people are at a point where it requires a lot of energy, and quite a bit of anguish, to say: I’m going to make some tough decisions about what I really believe.

And it seems to me that this whole area of artificial intelligence, and the chance that we may reach the capacity to literally destroy ourselves, requires us to think long and hard and to make judgements that will have to be based, if you like, on faith. You can’t know exactly what’s going to happen. So if people want to say, well, it requires a lot of faith to think through whether I believe in a God, I would’ve thought this whole area presents just as great a challenge. Who am I? How am I going to work this out? Do I put some ethical framework down, or do I just sit in the pot and let the water gradually boil until it’s too late?

John Lennox: Yes. I think this is a very important issue we’ve come to.

There’s such confusion in the world about what faith is, and that’s mainly the fault, I would say, of people like Dawkins and Hitchens, who actually didn’t know what they were talking about, because they redefined faith as a religious word that means believing where there’s no evidence. What they fail to see is that that’s a definition of blind faith, which only a fool would get involved with.

The English word faith comes from the Latin fides, from which we get fidelity, which conveys the whole idea of trustworthiness. And trustworthiness comes from having backup in terms of evidence. A bank manager will only have faith in you if you prove you’ve got the collateral; you have to bring the evidence.

We’d be foolish to trust people without evidence. So evidence-based faith is something everyone understands, but what they don’t realize is that it’s essential to science and it’s essential to a genuine Christian faith in God. I get leery these days, John, of using the word faith on its own. Yeah. Because people think you’re talking about religion.

Sometimes they say to me, will you give a talk on faith and science? I say, do you want me to talk about God? Oh, yes. Well, I say, that’s not your title. I could talk about faith in science without even mentioning God, because scientists have got a basic credo, things they believe. They’ve got to believe that science can be done.

They’ve got to believe that the universe is rationally intelligible. That is their faith, and, as Einstein once said, no scientist could be imagined without it. So if you want to talk about faith as faith in God, please call it faith in God, or else we’re going to get very confused. Now, coming back to this, you are absolutely right.

This is going to force us, whether we like it or not, to do some hard thinking and to reinspect and recalibrate our worldview, because our attitude to these things depends on our worldview, our set of answers to the big questions of life. What is reality? Who am I? What’s going to happen after death? And all those kinds of things.

They’re coming out in this area. We’re being forced to think about them. And as you say, we can sit like the toad in the kettle while the water’s boiling and pretend that nothing’s happening, but we can’t afford that. That isn’t a luxury; that’s suicidal. And the trouble is (there is a book called The Suicide of the West) that we’re just not thinking enough.

And I feel, and I know you’re doing this, and I feel called to do it too, that we need to put issues out into the public space so that people can really see that they can think about them and come to conclusions about them. And as you say, we’re really landing this discussion, and it seems to me that it comes down to focusing on what’s going on.

I read Harari and other books like this, and I say, you know, I can understand what you’re looking for. You’re looking for something that’s very deep and hardwired in us. And I make people smile sometimes when I meet these transhumanists and say, guys, I respect what you’re after, but you’re too late.

And they say, what, too late? Of course we’re not too late. I say, you actually are too late. Take your two problems. One: physical death. I say, now, I believe there’s powerful evidence that that was solved 20 centuries ago. It was actually solved before that, but 20 centuries ago there was a resurrection in Jerusalem.

We celebrate it at Easter; we’re just after Easter now. As a scientist, I believe it for various reasons that we can discuss, but the point is that if Jesus Christ broke the death barrier, that puts everything in a different light. Why? Because it affects you and me. How does it affect you and me? Because if that is the case, then we need to recalibrate and take seriously his claim to be God become human.

I say, isn’t that interesting? What are you trying to do? You’re trying to turn humans into gods. The Christian message goes in the exact opposite direction: it tells us of a God who became human. Do you notice the difference? And of course that actually gets people fascinated. I say, you are actually taking seriously the idea that humans can turn themselves into gods by technology and so on. Why won’t you take seriously the idea that there is a God who became human? Is that any more difficult to do? And once you’ve got that, then I think, arguably, you need to take seriously what Jesus says, and that is the Christian message.

God has become human in order to do what? To give us his life, if you like, to turn us into what you want to be. Because the amazing thing about this is that the central message of the Christian faith to you and me is the answer to the transhumanist dream. One: Christ promises eternal life, that is, life that will never cease.

And it begins now, not in some mystical, uncertain transhuman future, but right now. Secondly, because he rose from the dead, he promises that we will one day be raised from the dead to live with him in another, transcendent realm that is perhaps even more real, indeed is more real, than this one. And that’s going to be the biggest uploading ever.

You see, your hope for the future of humanity, changing human beings into something more desirable, living forever and happier: all of that is offered. But the difference between the two is radical. Firstly, your idea is to use human intelligence to turn humans into gods, bypassing the problem of moral evil.

You’re never going to do it. No utopia has ever been built, and of course you’re not thinking straight, because there have been attempts to re-engineer humanity, crude ones, of course: the Nazi program of eugenics, the Soviet attempts to make a new man. And what did they lead to? Rivers of blood, with the 20th century being the bloodiest century in history.

Mind you, what’s happening now might make this a very bloody century, but what I’m saying, John, is that I believe even more strongly than ever that we as Christians have got a brilliant answer and a message to speak into this that ticks all the boxes. But it means facing moral reality, which is exactly at the heart of the fear with which some people approach these issues.

Conclusion

John Anderson: John, I think we should land the plane there. You couldn’t have more clearly articulated the reality of the changes before us, the challenges before us, and the need for people to get off the fence and not allow themselves to be satiated by false comfort. The world doesn’t give us that option anymore, in my view.

If we don’t make decisions now, individually and corporately, we’re sunk. I don’t want to subtract from or add to that remarkable overview of what we’re facing. So I’ll land the plane, and thank you very much indeed.

John Lennox: Happy landing.